In this project we developed an automated data preparation system to improve Torah reading recognition with cantillation (te'amim). The main challenge was to adapt existing datasets, which typically consist of long recordings, to the requirements of training models like Whisper, which necessitate short audio segments (under 30 seconds).

The developed system includes: VAD-based audio segmentation, initial transcription using Whisper, and a unique iterative text alignment mechanism bridging the gap between modern transcription and biblical text. Additionally, tools were created for automated data collection from YouTube, utilizing an LLM for Parasha/Aliyah identification.

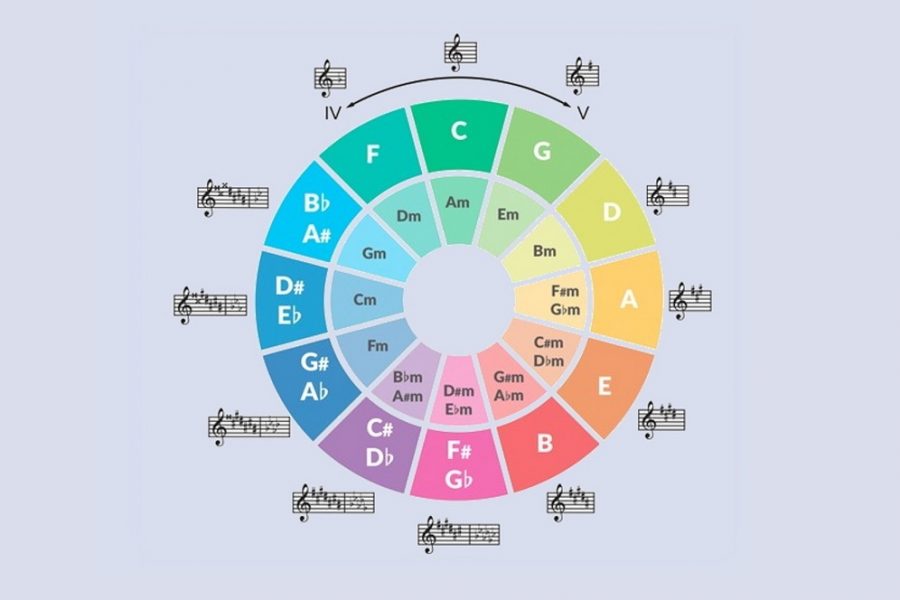

Symbian Guitar Tuner

Symbian Guitar Tuner

Automatic Transcription of Piano Polyphonic Music

Automatic Transcription of Piano Polyphonic Music

MP3 Robust Digital Watermark

MP3 Robust Digital Watermark

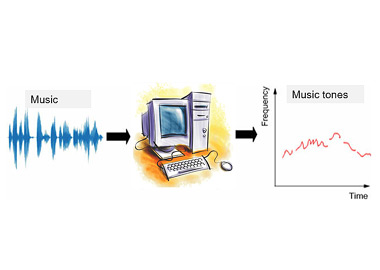

Automatic Transcription of Music

Automatic Transcription of Music

Ancillary Instrument for Piano Tuning

Ancillary Instrument for Piano Tuning