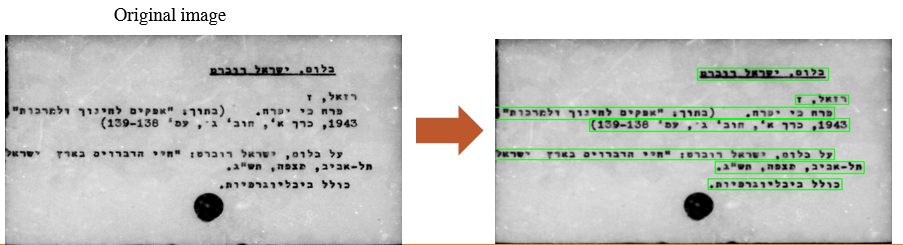

Joseph Yerushalmi, a librarian at the University of Haifa Library, created a catalogue of around 65,000 records on paper cards. The catalogue covers articles from the 1940s to the 1970s and focuses on individuals such as artists, writers, philosophers, intellectuals, and historical figures. The collection also includes reviews of books and literary works.

To preserve this valuable catalogue, it must be digitized. The project is divided into two parts:

The first part is detecting text regions, which means assigning each region on a card its appropriate label: Title, Author, Text, or Other.
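To make the labeling step concrete, here is a minimal sketch of how the output of region detection might be stored and turned into a per-card record. It assumes each detected region carries a bounding box, one of the four labels, and its recognized text. The names `RegionLabel`, `Region`, and `group_by_label` are hypothetical and are not taken from the project itself.

```python
from dataclasses import dataclass
from enum import Enum


class RegionLabel(Enum):
    # The four classes named in the project description.
    TITLE = "Title"
    AUTHOR = "Author"
    TEXT = "Text"
    OTHER = "Other"


@dataclass
class Region:
    # Hypothetical bounding box in pixel coordinates: (x0, y0, x1, y1).
    box: tuple[int, int, int, int]
    label: RegionLabel
    # Recognized text for this region (assumed to come from a later OCR step).
    content: str = ""


def group_by_label(regions: list[Region]) -> dict[str, list[str]]:
    """Collect the text of a card's regions under each label name."""
    record: dict[str, list[str]] = {label.value: [] for label in RegionLabel}
    # Sort top-to-bottom, then left-to-right, so each label keeps reading order.
    for region in sorted(regions, key=lambda r: (r.box[1], r.box[0])):
        record[region.label.value].append(region.content)
    return record


if __name__ == "__main__":
    card = [
        Region((40, 120, 600, 400), RegionLabel.TEXT, "Body text"),
        Region((40, 20, 600, 60), RegionLabel.TITLE, "Card title"),
        Region((40, 70, 300, 100), RegionLabel.AUTHOR, "Author name"),
    ]
    print(group_by_label(card))
```

Keying the record by label name keeps every card's output in the same shape, even when a card has no region of some class (that label simply maps to an empty list).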

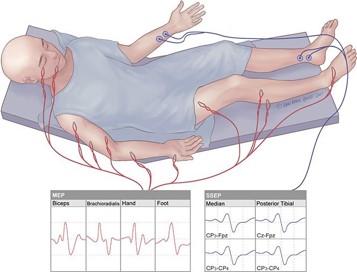

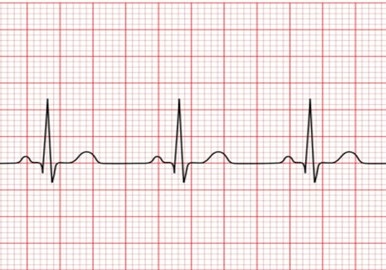

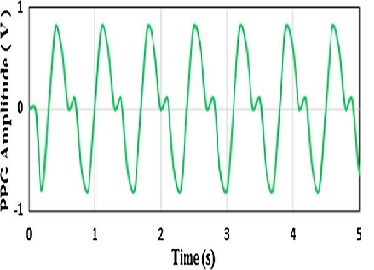

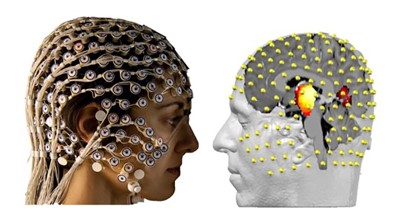

Classification of SSEP Signals for Surgical Monitoring

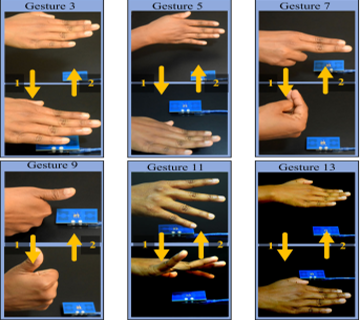

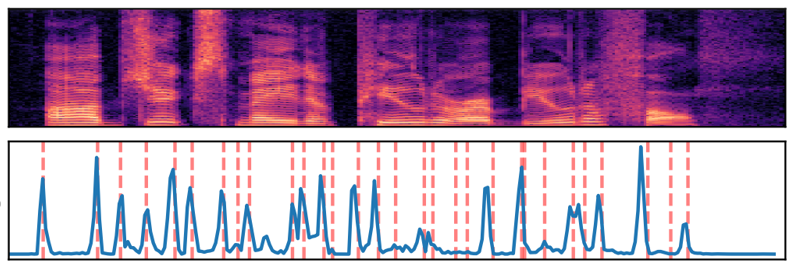

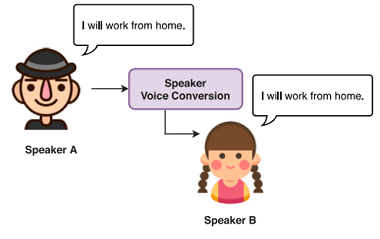

What Does Lexical Stress Look Like?

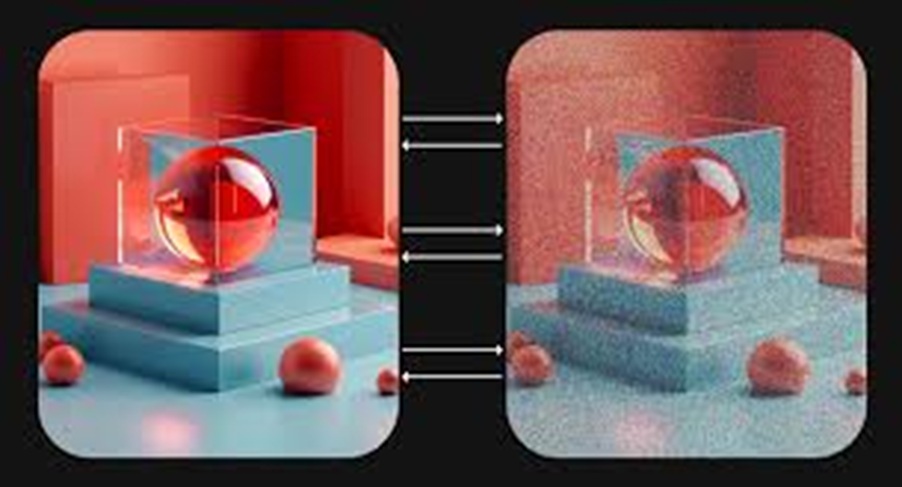

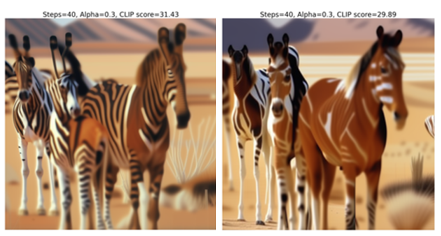

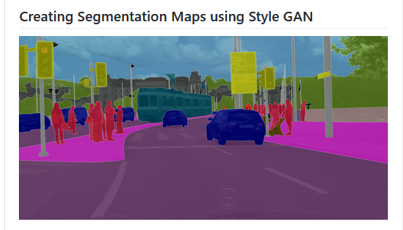

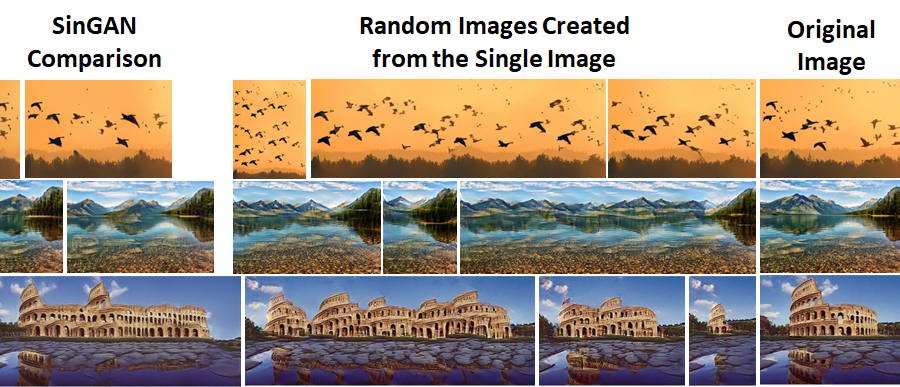

Image Manipulation with GANs Spatial Control

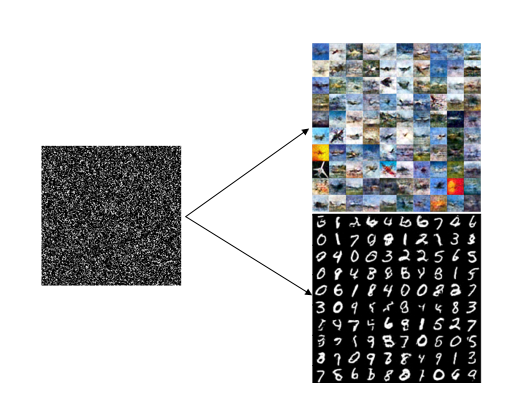

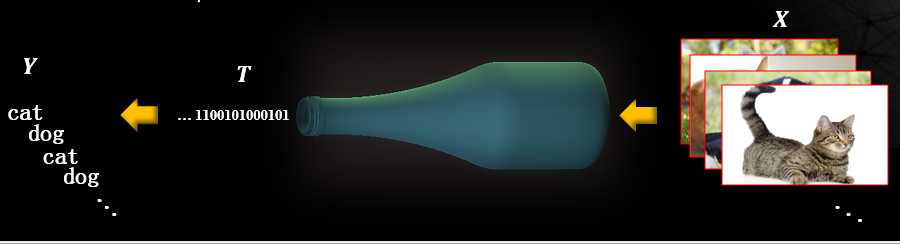

Generative Deep Features

Gunshot Detection in Video Games

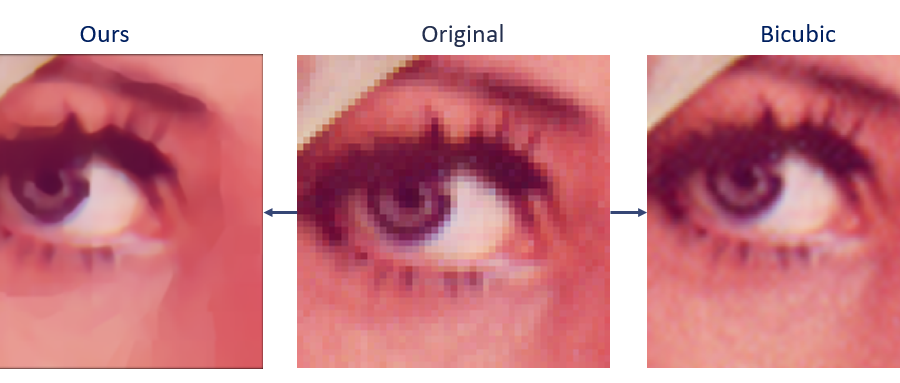

Deep Image Interpolation

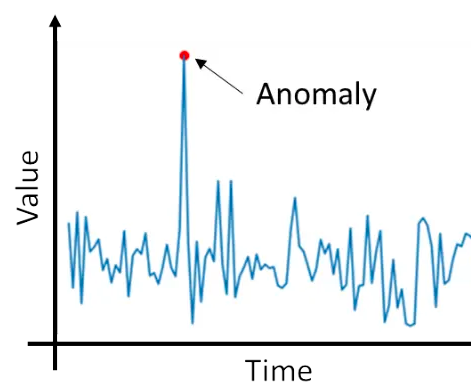

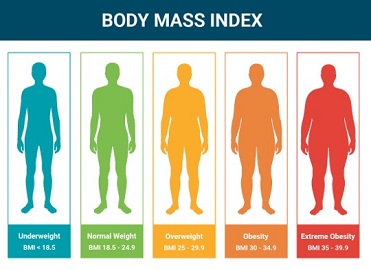

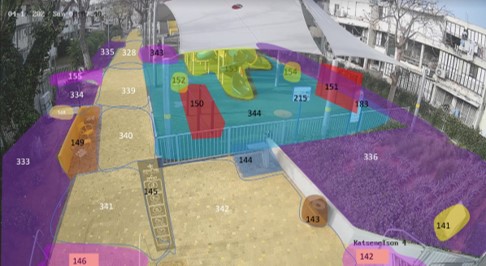

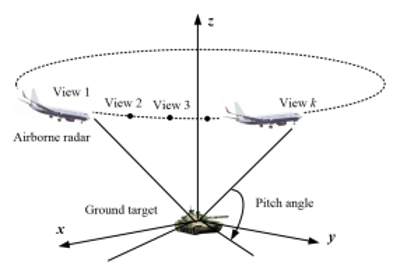

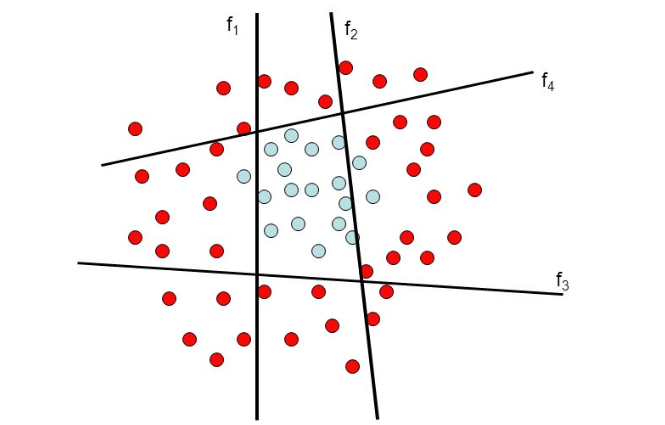

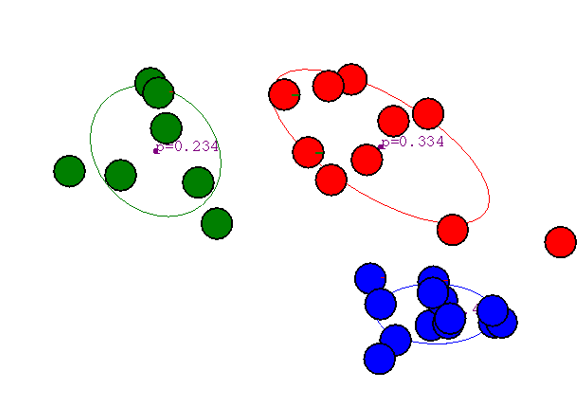

Unsupervised Abnormality Detection by Using Intelligent and Heterogeneous Autonomous Systems

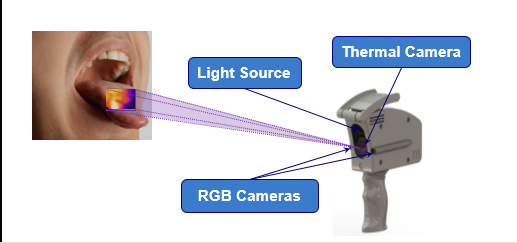

Early Detection of Cancer Using Thermal Video Analysis

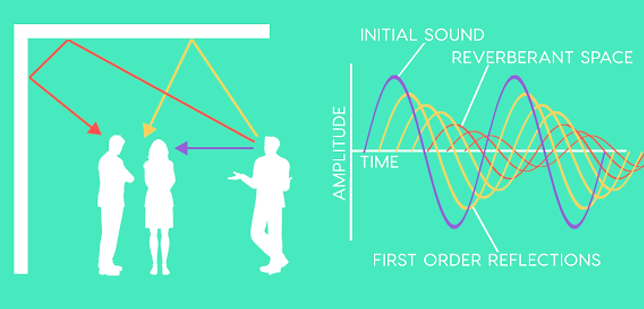

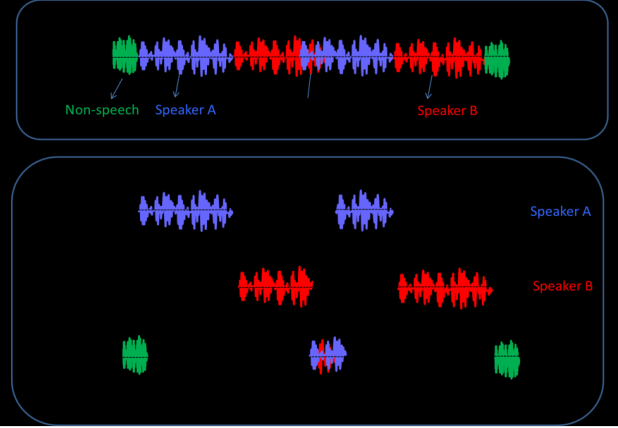

Speaker Diarization using Deep Learning

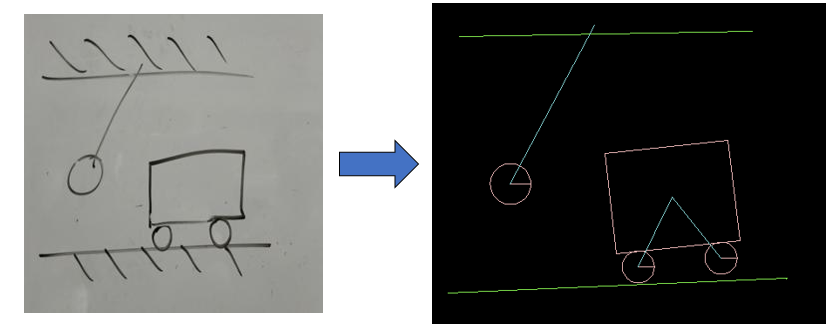

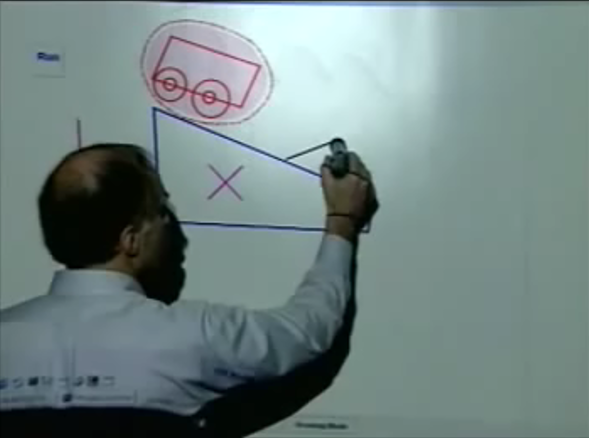

Physics Classroom Augmented Reality with Your Smartphone Part B

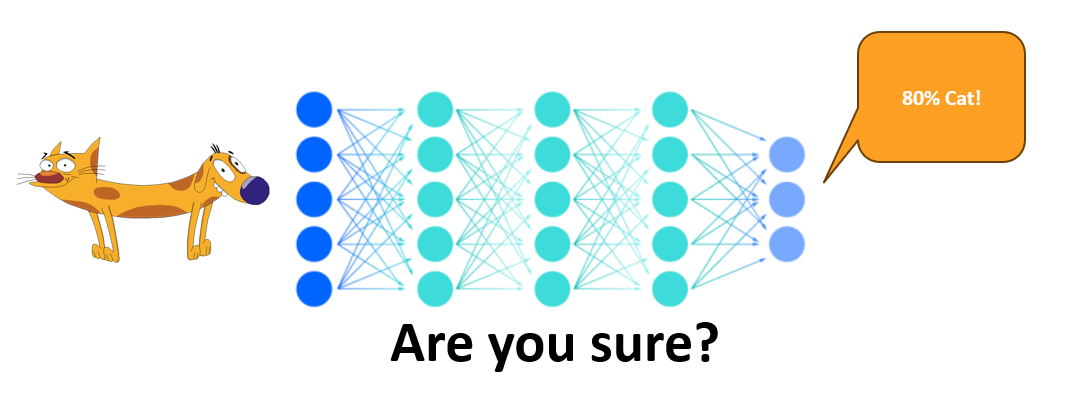

Deep Learning for Physics Classroom Augmented Reality App

Efficient Deep Learning for Pedestrian Traffic Light Recognition

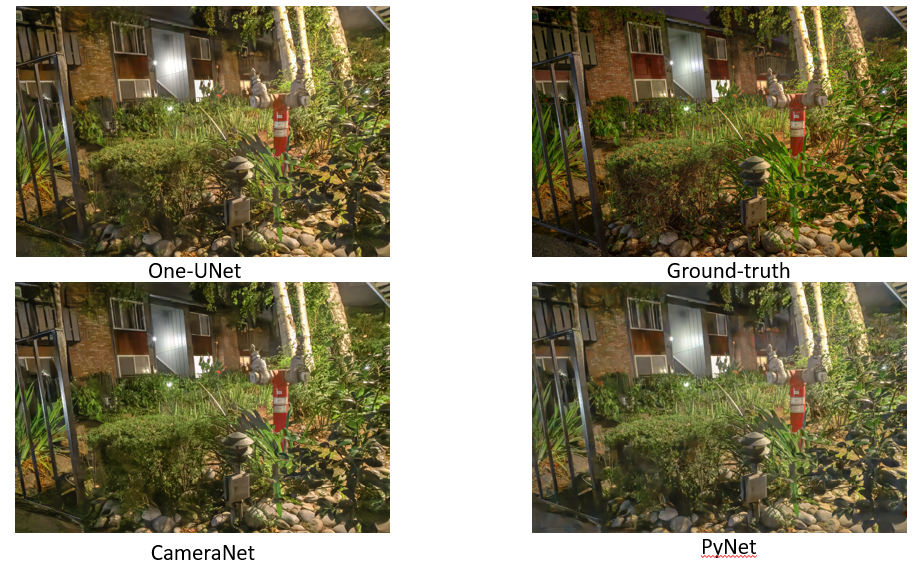

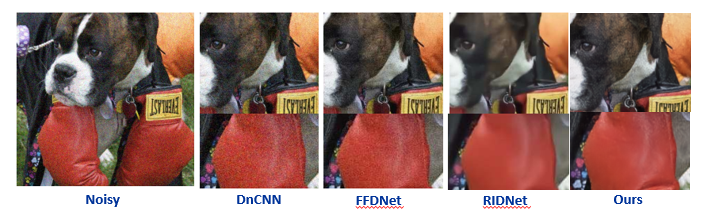

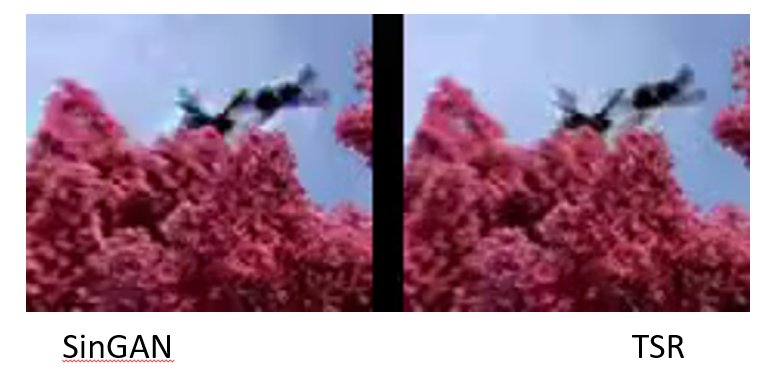

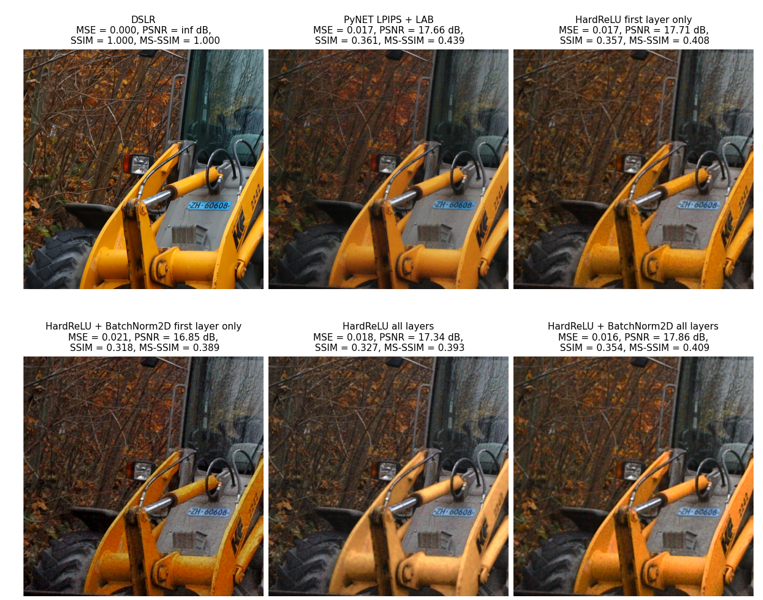

From Deep Features To Image Restoration

Advanced Framework For Deep Reinforcement Learning
