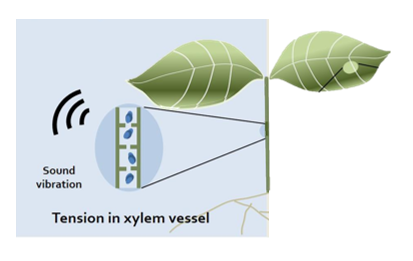

This work explores the classification of plant-emitted ultrasonic sounds using machine learning, adapting speech-processing models to a new biological domain. The dataset, provided by Tel Aviv University, consists of 16,000 audio samples, each a 2 ms recording sampled at 500 kHz, from several plant species, including tomato, corn, cacti, wheat, lamium, tobacco, and grapevine. The recordings cover conditions such as “cut” and “dry” and serve as the basis for species and condition classification.
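
As a rough sanity check on the figures above, the sketch below works out how many samples a single recording contains and the highest frequency it can represent. It assumes every recording is exactly 2 ms long and that the 16,000 figure counts recordings; the constant names are illustrative and not taken from the project.

```python
# Assumed figures, taken from the dataset description above.
SAMPLE_RATE_HZ = 500_000      # 500 kHz sampling rate
CLIP_DURATION_S = 0.002       # 2 ms per recording (assumed exact)
NUM_CLIPS = 16_000            # assumed to count individual recordings

# Samples in one recording: rate multiplied by duration.
samples_per_clip = round(SAMPLE_RATE_HZ * CLIP_DURATION_S)

# Highest representable frequency (Nyquist limit) is half the sampling rate.
nyquist_hz = SAMPLE_RATE_HZ / 2

# Total number of samples across the whole dataset under these assumptions.
total_samples = samples_per_clip * NUM_CLIPS

print(samples_per_clip, nyquist_hz, total_samples)
```

Under these assumptions each input is a very short vector of about a thousand samples, far shorter than the inputs speech models are usually designed for.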

We aim to improve classification performance, measured by F1 score and accuracy, through advanced model architectures and self-supervised (SS) representations.
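
To make the two evaluation metrics concrete, here is a minimal sketch of accuracy and F1 for a multi-class problem. The text does not say how F1 is averaged across classes, so macro-averaging is an assumption here, and the labels are made-up examples rather than project data.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (macro-averaging is assumed)."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1   # predicted class gains a false positive
            fn[t] += 1   # true class gains a false negative
    scores = []
    for label in labels:
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores.append(2 * tp[label] / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical species labels, for illustration only.
y_true = ["tomato", "tomato", "corn", "wheat"]
y_pred = ["tomato", "corn", "corn", "wheat"]

print(accuracy(y_true, y_pred), macro_f1(y_true, y_pred))
```

Reporting both is useful when classes are imbalanced: accuracy can look high while a rare species or condition is classified poorly, which macro F1 exposes.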

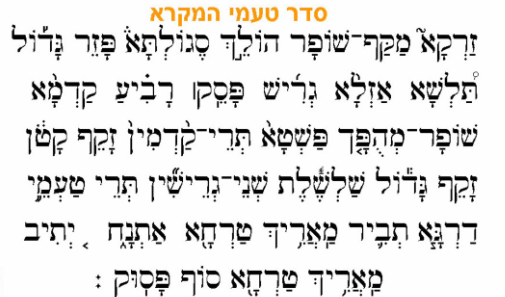

How Does a Lexical Stress Look like?

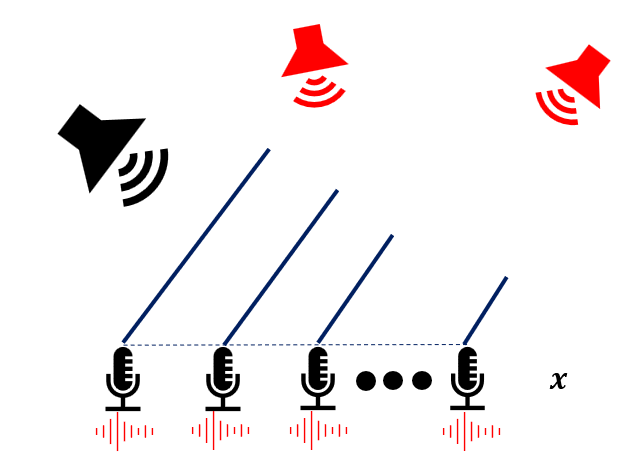

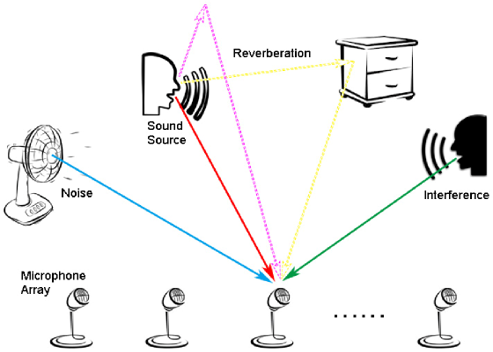

RTF Estimation Using Riemannian Geometry for Speech Enhancement in the Presence of Interferences

Speaker Localization Inside a Car Using a Microphone Array

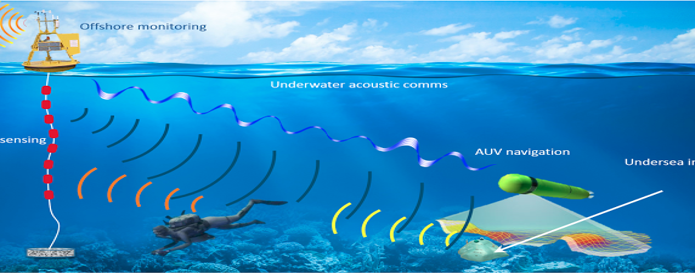

Iterative adaptive estimation of underwater channel transfer function based on soft information using turbo equalization

Proximity Sensor for Smartphones based on Acoustic Measurements

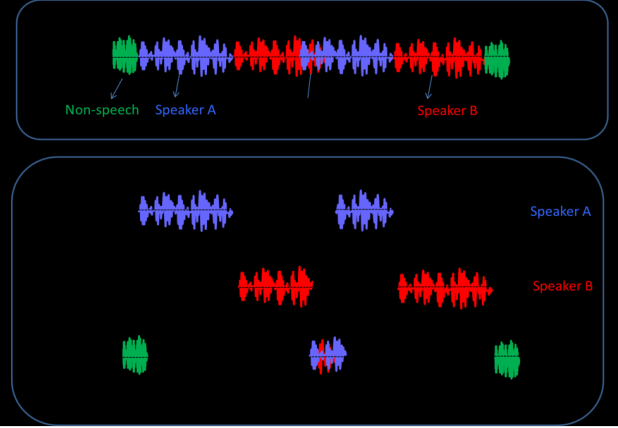

Speaker Diarization using Deep Learning

Robust Automatic Detector And Feature Extractor For Dolphin Whistles

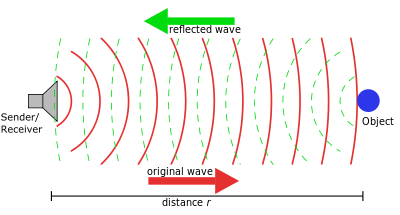

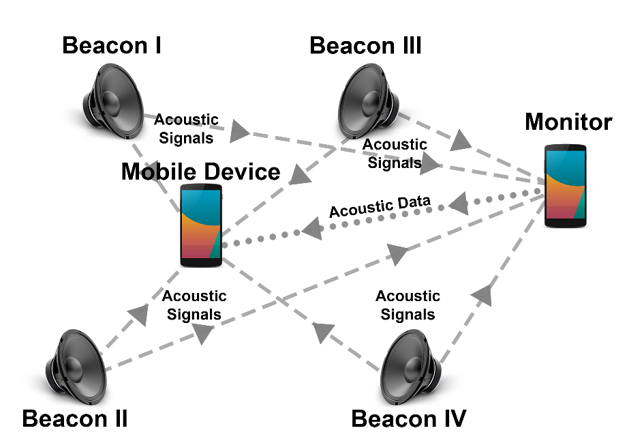

Acoustic positioning with unsynchronized sound sources

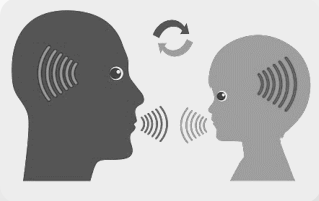

Audio retrieval by voice imitation

Siren Detection Algorithm in Noisy Environment for the Hearing Impaired

Audio QR Over Streaming Media

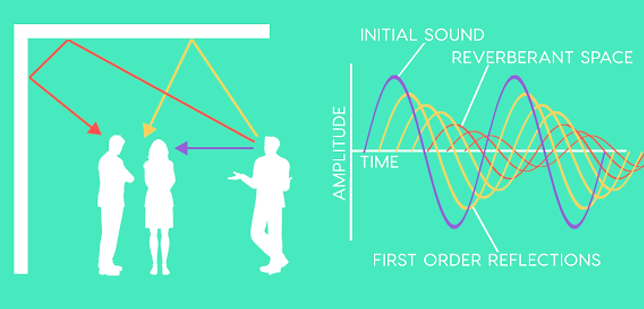

Non-Coherent Multichannel Speech Enhancement in Non-Stationary Noise Environments

People Metering Using Mobile Devices

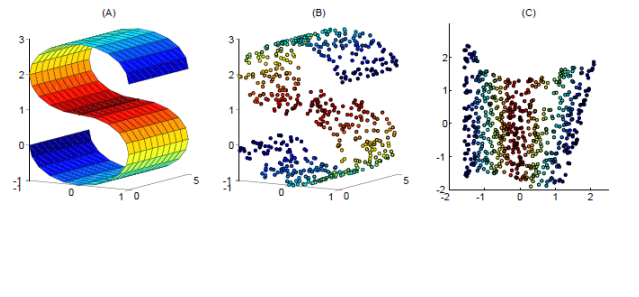

Temporal Decomposition of Speech

Speech Bandwidth Extension

Detection of Spectral Signature in SONAR Signal, Part A+B

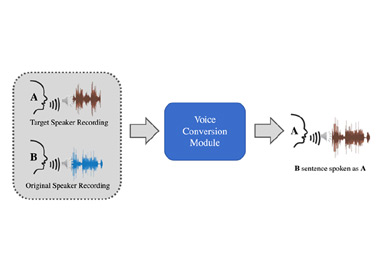

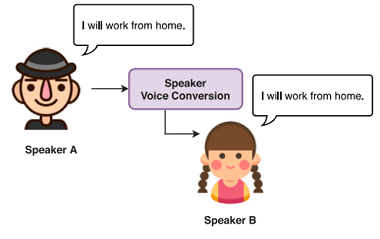

Voice Morphing

HMM Based Speech Recognition System

Real-Time Digital Watermarking System for Audio Signals Using Perceptual Masking

Development of a New Algorithm for Voice Modification

Real-Time Embedding of Digital Watermarking for Audio Signal

Real Time Implementation of Low Bit-Rate Speech Compression on SHARC DSP

Widening of Band-Width in Telephony
