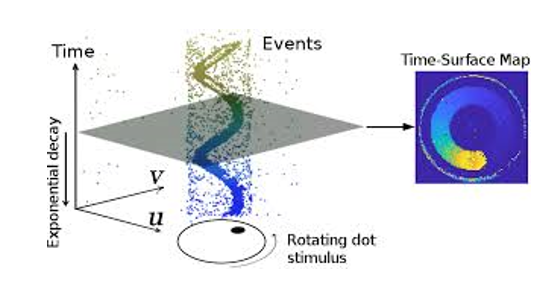

Denoising for Event Cameras

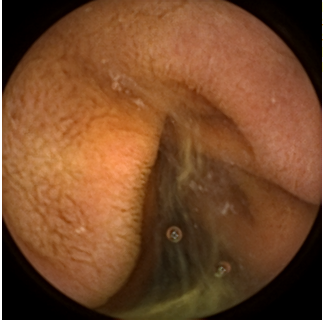

Estimating Intestine Blood Flow With Video Analysis

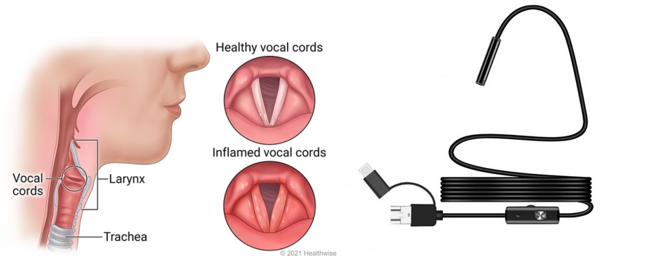

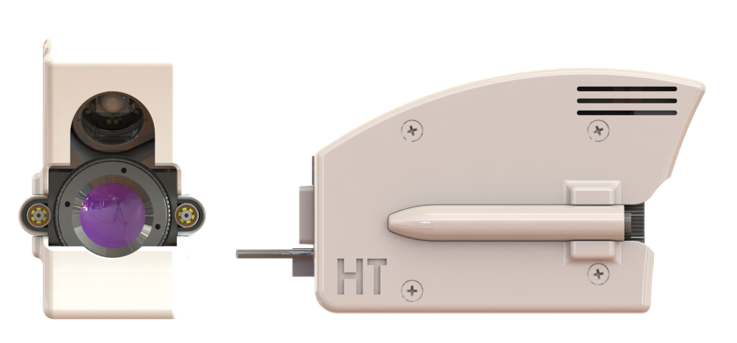

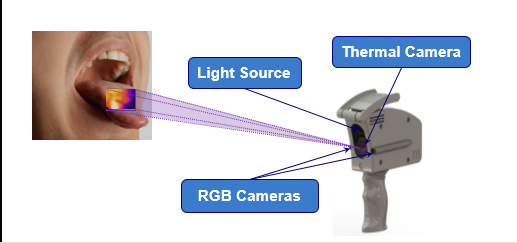

Motorized Thermal Camera Slider for Oral Cancer Detection

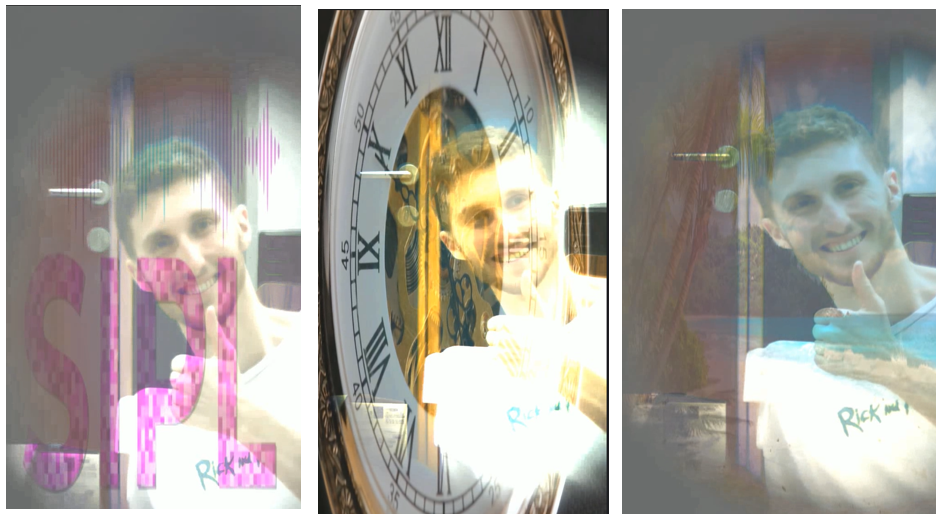

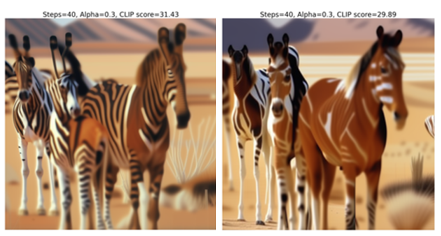

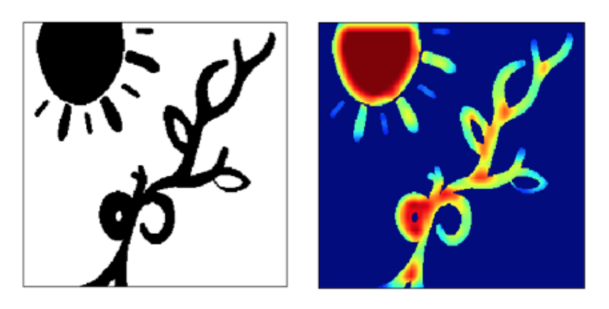

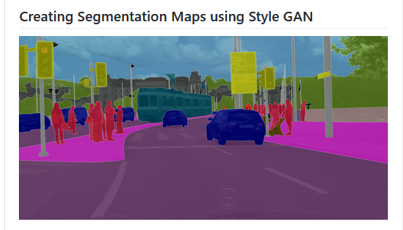

Image Manipulation with GANs Spatial Control

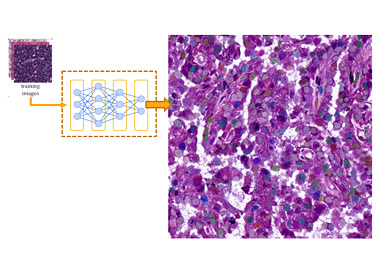

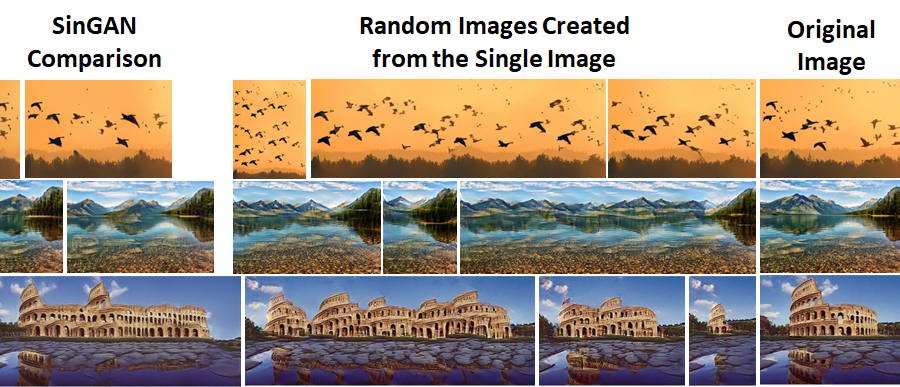

Generative Deep Features

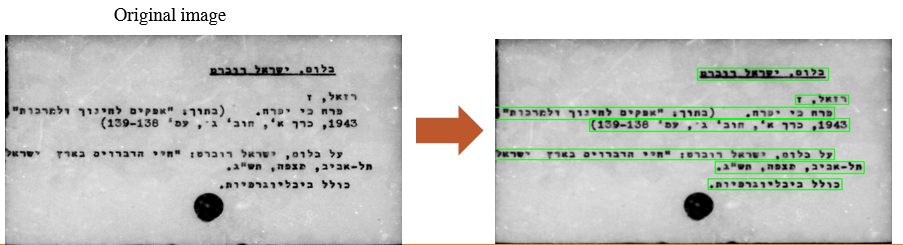

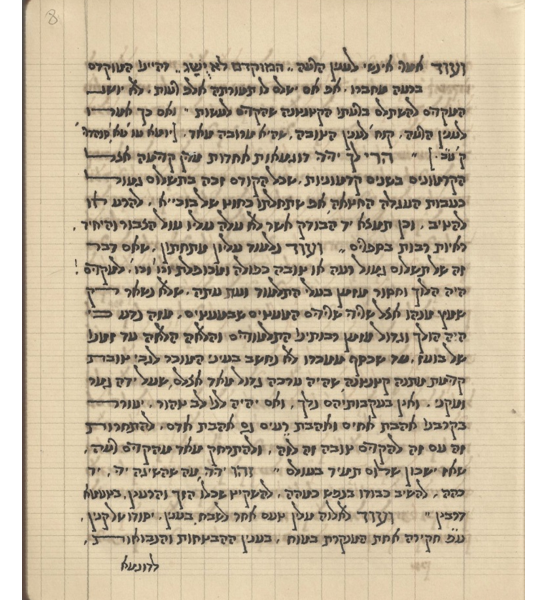

Optical Character Recognition (OCR) for Old Torah Manuscripts

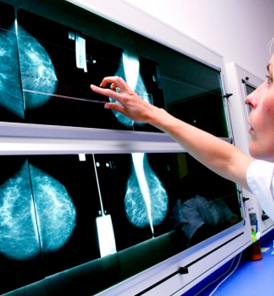

Early Detection of Cancer Using Thermal Video Analysis

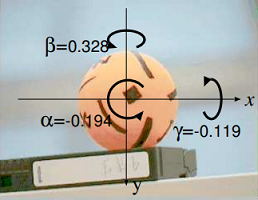

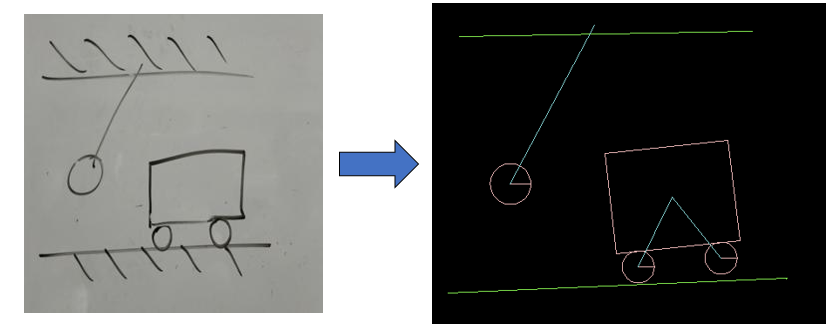

Physics Classroom Augmented Reality with Your Smartphone Part B

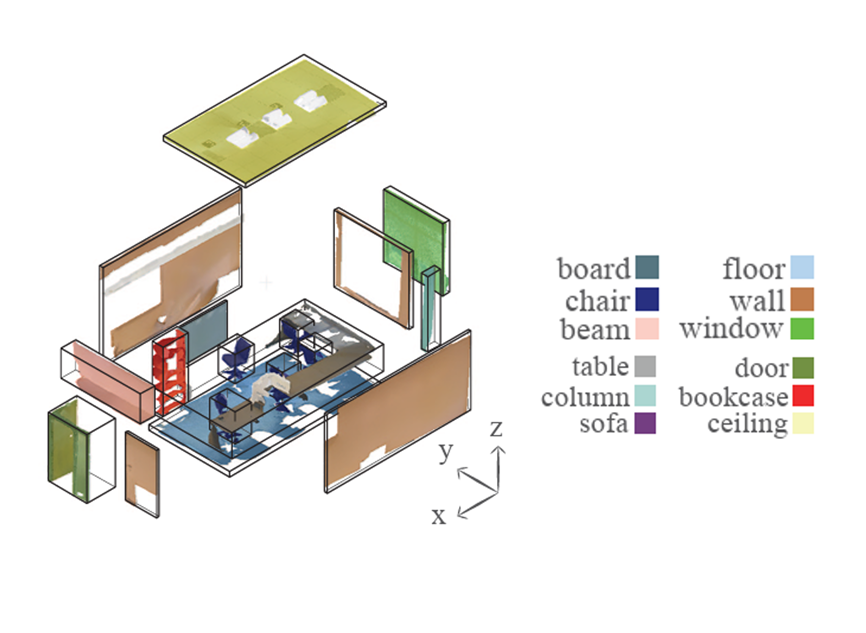

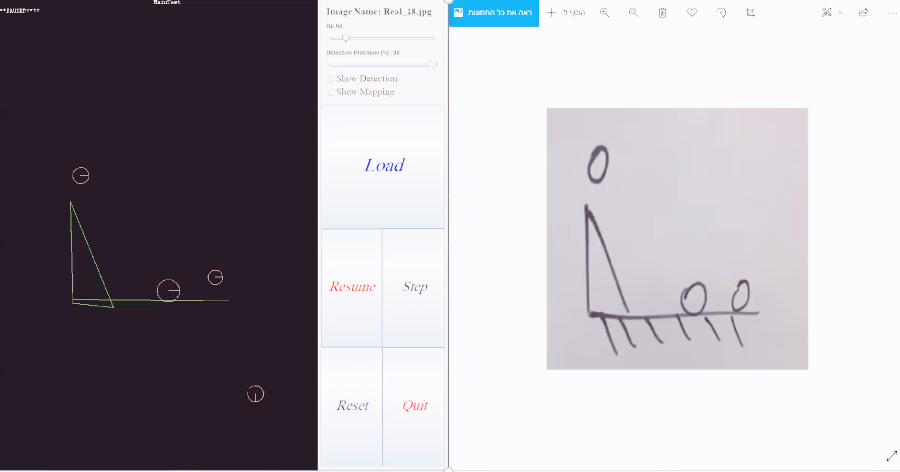

Deep Learning for Physics Classroom Augmented Reality App

Efficient Deep Learning for Pedestrian Traffic Light Recognition

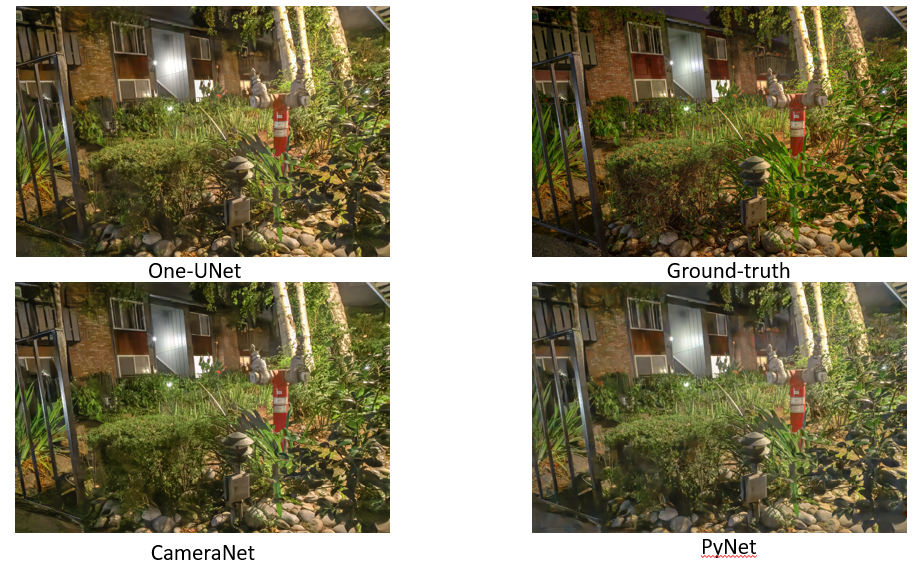

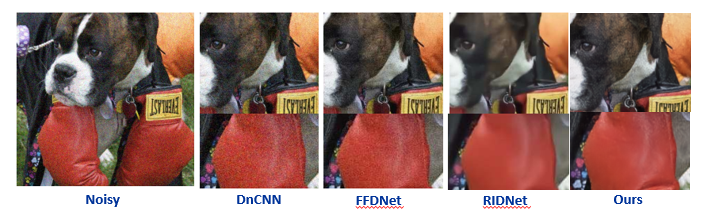

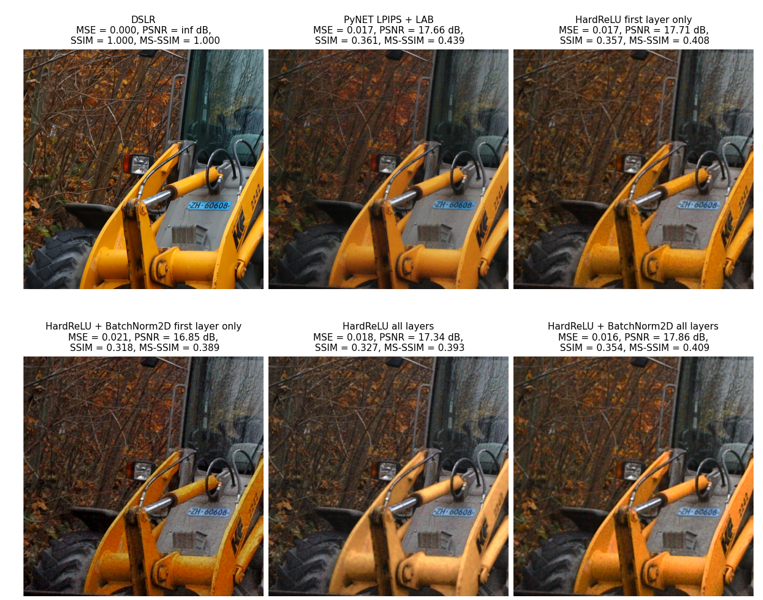

From Deep Features To Image Restoration

Relative Camera Pose Estimation Using Smartphone Cameras and Sensors

Identification Of Content In Images Of Promotional Brochures

Detection and Localization of Cumulonimbus Clouds in Satellite Images

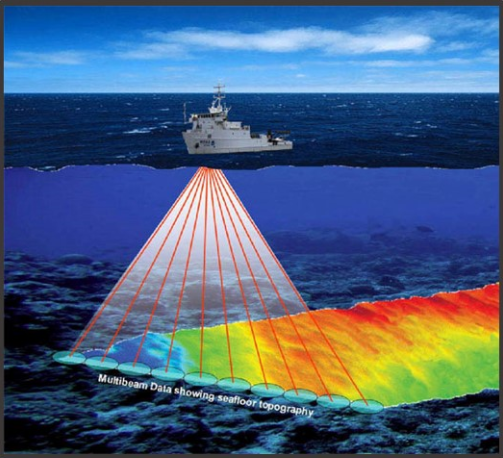

Anomaly Detection in Multibeam Echosounder Seabed Scans

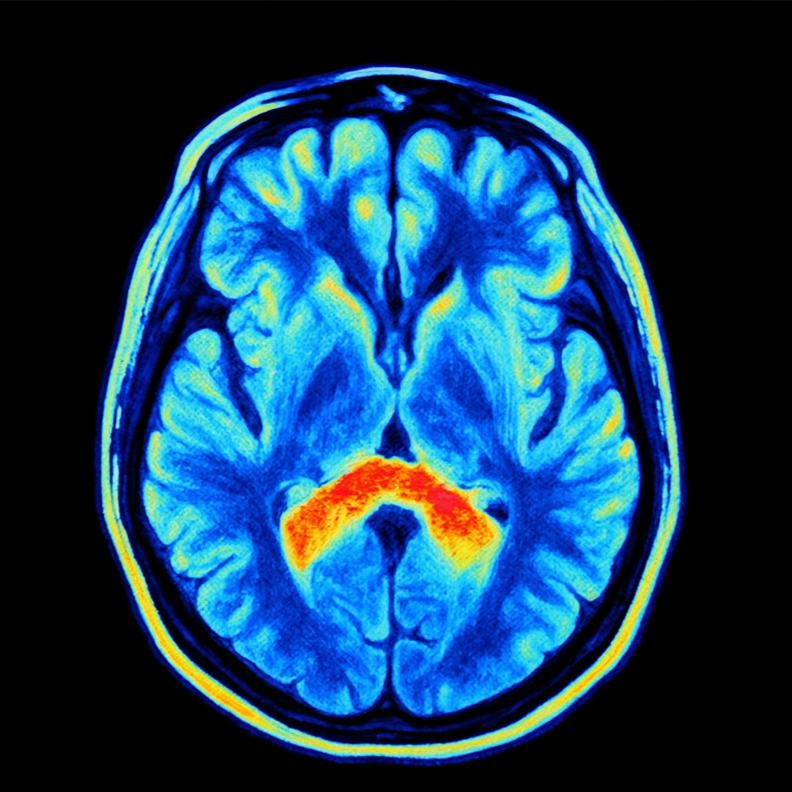

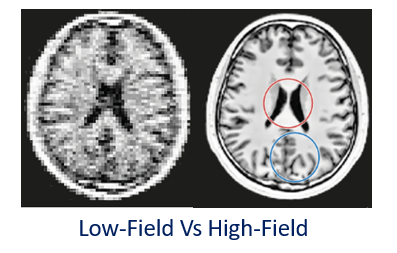

Predicting the Existence of Dyslexia in Children Using fMRI

Objects Removal from Crowded Image

Robust Underwater Image Compression

Fast High Efficiency Video Coding (HEVC)

Distance Estimation of Marine Vehicles

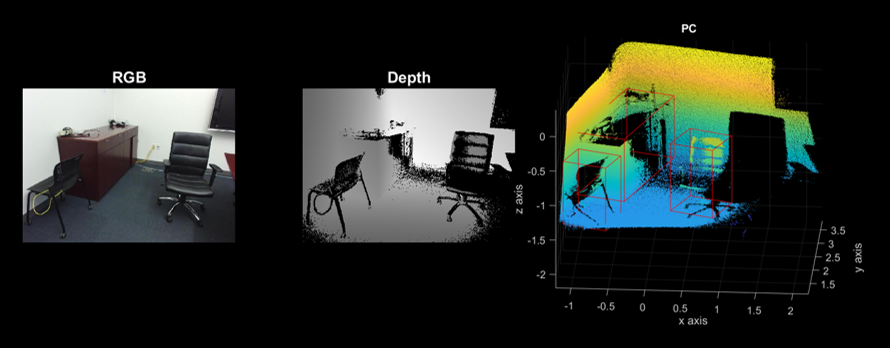

Motion Analysis Using Kinect for Monitoring Parkinson's Disease

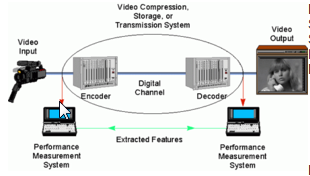

Video Quality Assessment Prototype System

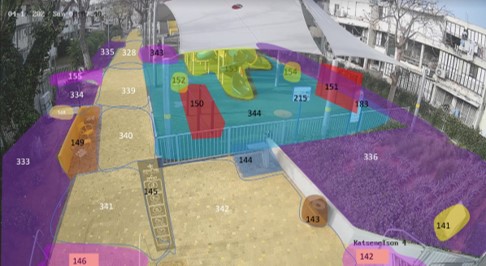

Augmented Reality Pinball

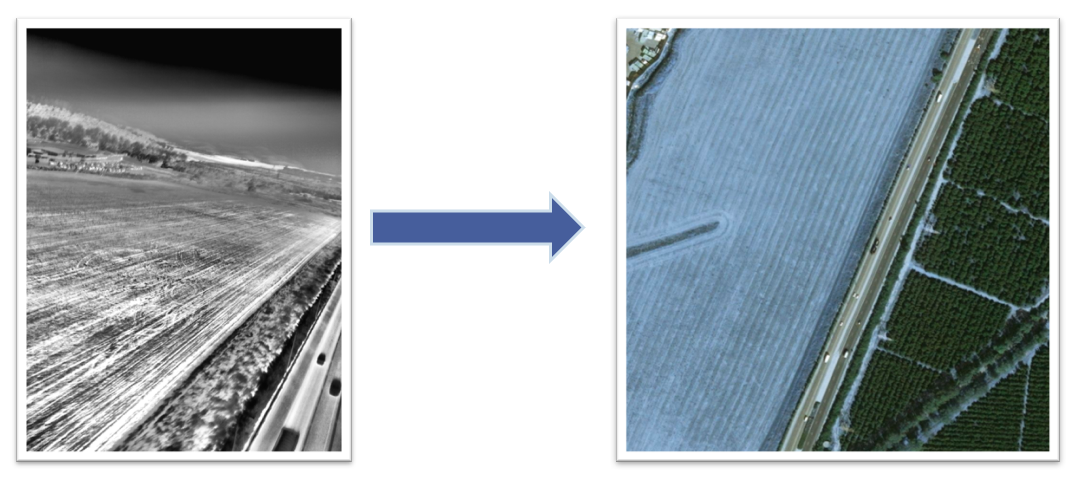

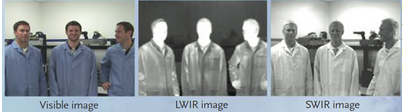

Tone Mapping of SWIR Images

Change Detection of Cars in a Parking Lot

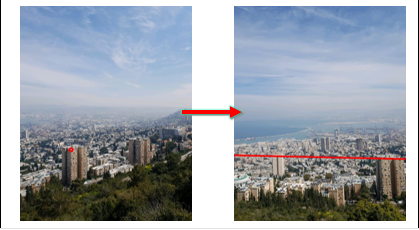

Real Time Airborne Video Stabilization

Determining Image Origin & Integrity Using Sensor Noise

Image Quality Assessment Based On DCT Subband Similarity

Cast Shadow Detection in Images & Video

Feather Color Analysis for the Barn Owl

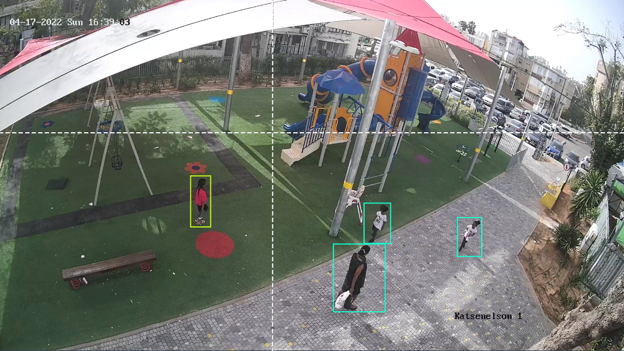

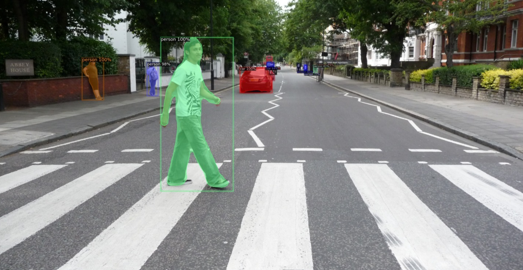

Real-Time Pedestrian Detection and Tracking

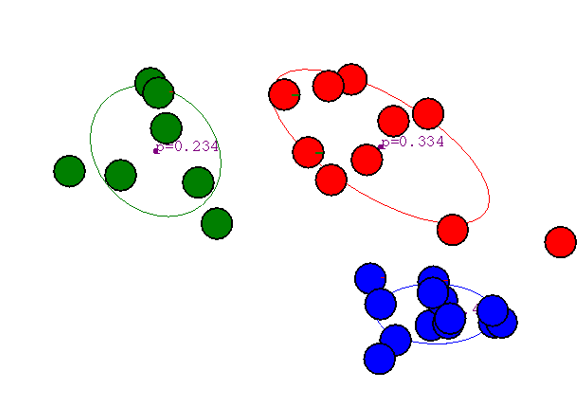

Object Reidentification in Video Using Multiple Cameras

Video Packet Loss Concealment Detection Based On Image Content

Detecting Added Markers and Notes on Printed Text

Real-Time Pedestrian Motion Detection & Tracking

Image Reconstruction from Plenoptic Camera

Tracking People Using Video Images and Active Contours

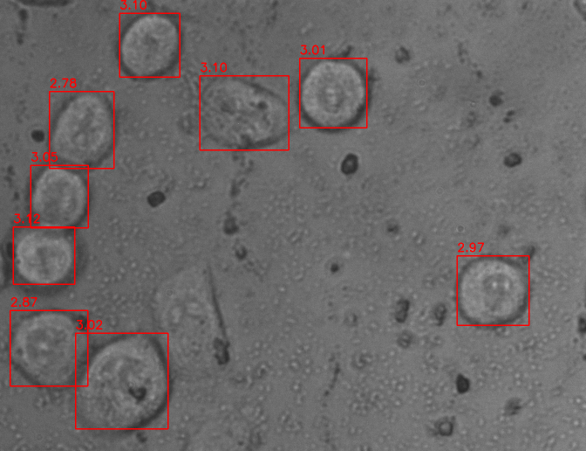

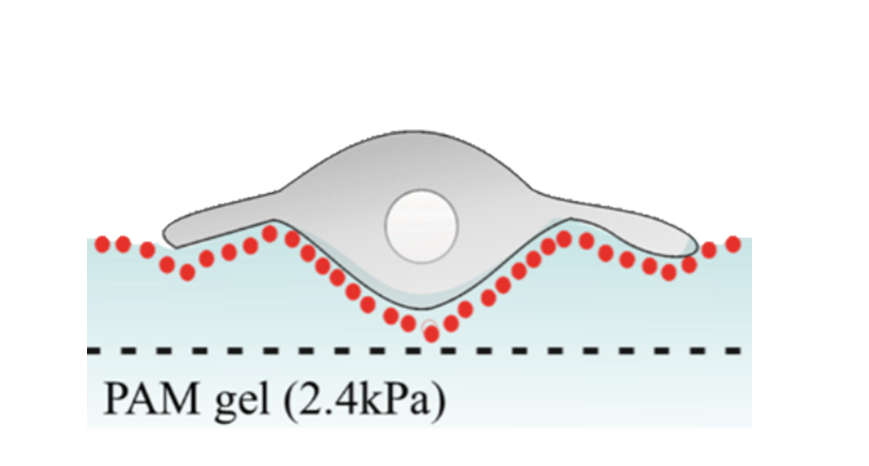

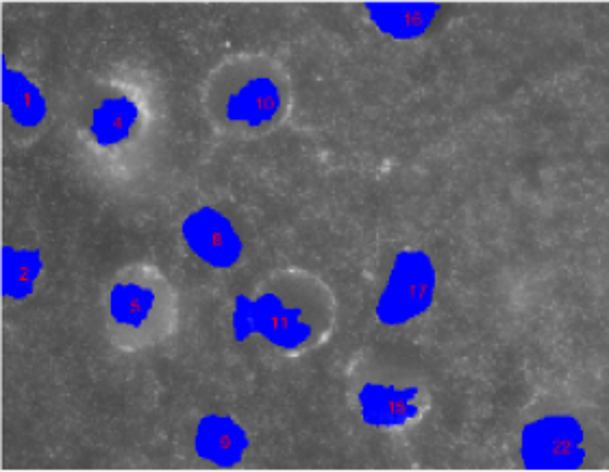

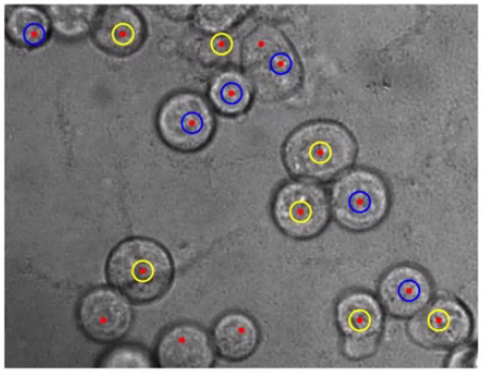

Intravascular Ultrasound Image Analysis

Lens Motor Noise Reduction For Digital Camera

Face Detection in Video

Fractional Zoom For Images

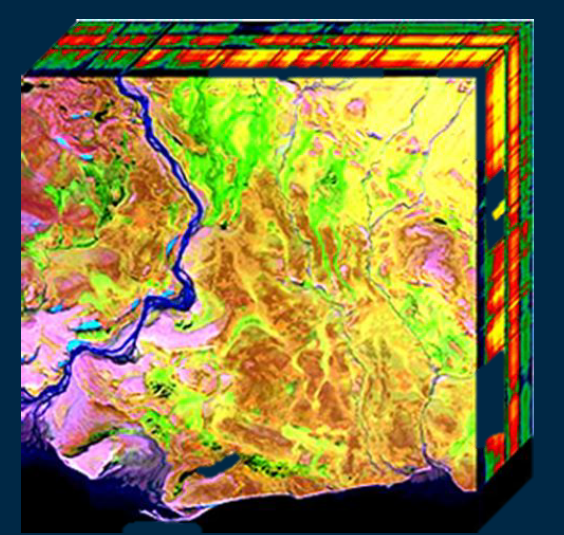

Anomaly Detection in Hyperspectral Images, Part A+B

Lossless Compression of Images Using Adaptive Scan

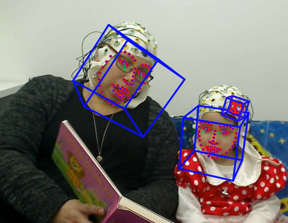

Automatic Detection of Location and Face Orientation

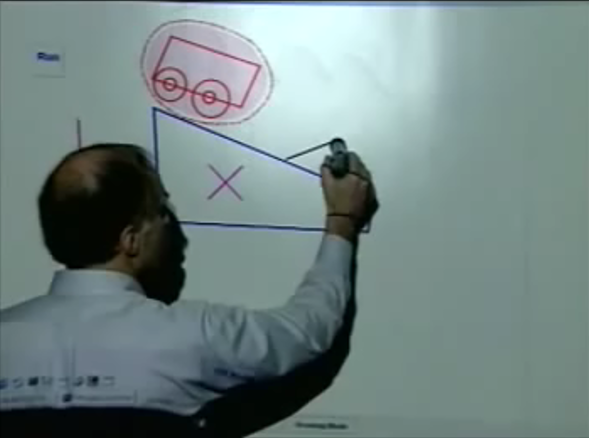

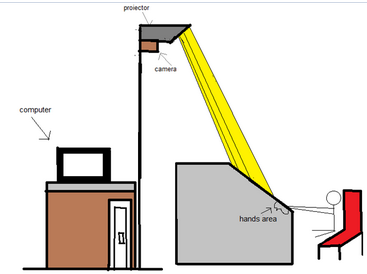

Real-Time Lecturer Tracking

Representing Images for Video Scene

Progressive Coding of Color Images - Part B - Compression

Digital Video Protection for Authenticity Verification

Low bit rate video compression with QuadTree using Adaptive Quantization

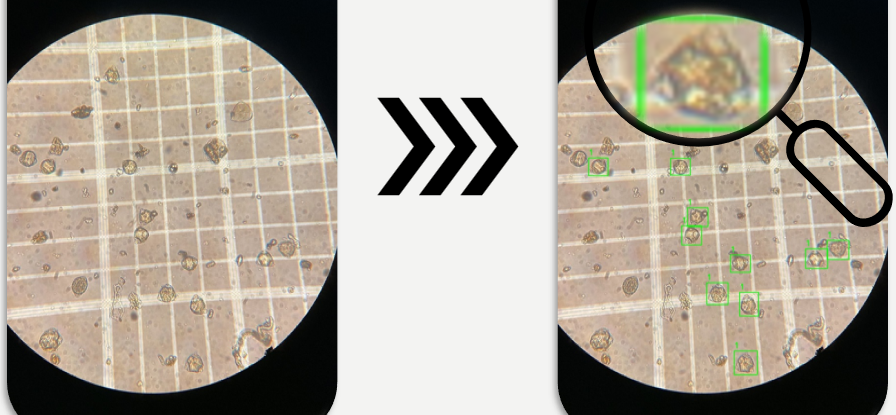

Wafer Images Compression Using Wavelets

Editing of VCD MPEG1 Files

Compression of Text Images Using Binary Images